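With the release of Google Play services 7.8, Google introduced the Mobile Vision API, which lets you perform face detection, barcode detection and text detection. In this tutorial, we'll develop an Android face detection application that lets you detect human faces in an image.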

Android Face Detection

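The Android Face Detection API tracks faces in photos and videos using landmarks such as the eyes, nose, ears, cheeks and mouth. Rather than detecting the individual features, the API detects the face as a whole and then, if configured to do so, detects its landmarks and classifications. The API can also detect faces at different angles.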

Android Face Detection - Landmarks

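A landmark is a point of interest within a face. The left eye, the right eye and the base of the nose are all examples of landmarks. The following landmarks can currently be found by the API: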

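1. Left and right eye
2. Left and right ear
3. Left and right ear tip
4. Base of the nose
5. Left and right cheek
6. Left and right corner of the mouth
7. Bottom of the mouth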

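When "Left" and "Right" are used, they are relative to the subject. For example, the `LEFT_EYE` landmark is the subject's left eye, not the eye that appears on the left when viewing the image.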
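To make the convention concrete, the following plain-Java sketch (no Android dependencies) converts a subject-relative side into the side of the picture on which that landmark appears. It assumes the subject is facing the camera; the `mirrored` flag exists because front-camera previews are often mirrored. `Side` and `LandmarkSides` are hypothetical names for illustration, not part of the Vision API.

```java
/** A side, used both for the subject ("my left") and for the picture ("left half of the image"). */
enum Side { LEFT, RIGHT }

final class LandmarkSides {
    private LandmarkSides() {}

    /**
     * Returns the side of the image on which a subject-relative landmark appears.
     * Assumes the subject faces the camera: in a normal (non-mirrored) photo the
     * subject's left shows up on the viewer's right, and mirroring undoes that swap.
     */
    static Side sideInImage(Side subjectSide, boolean mirrored) {
        Side opposite = (subjectSide == Side.LEFT) ? Side.RIGHT : Side.LEFT;
        return mirrored ? subjectSide : opposite;
    }
}
```

So in an ordinary photo, the `LEFT_EYE` landmark is usually the eye with the larger x coordinate.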

Classification

Classification determines whether a certain facial characteristic is present. The Android Face API currently supports two classifications:

- Eyes open: use the `getIsLeftEyeOpenProbability()` and `getIsRightEyeOpenProbability()` methods.
- Smiling: use the `getIsSmilingProbability()` method.
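Each of these methods returns a probability rather than a yes/no answer, and the value is -1 when it could not be computed (the Vision library exposes this sentinel as `Face.UNCOMPUTED_PROBABILITY`). The plain-Java sketch below shows one way to turn such a value into a three-way answer. `ClassificationHelper` is a hypothetical helper, and the threshold is left to the caller because the API does not prescribe one.

```java
/** Three-valued result for a classification such as "smiling" or "left eye open". */
enum Classification { YES, NO, UNKNOWN }

final class ClassificationHelper {
    /** Sentinel for "could not be computed"; mirrors the API's -1 convention. */
    static final float UNCOMPUTED = -1.0f;

    private ClassificationHelper() {}

    /**
     * Maps a probability in [0, 1] to YES or NO using the given threshold.
     * Any negative value (including the -1 sentinel) is reported as UNKNOWN.
     */
    static Classification classify(float probability, float threshold) {
        if (probability < 0f) {
            return Classification.UNKNOWN;
        }
        return probability >= threshold ? Classification.YES : Classification.NO;
    }
}
```

For example, `ClassificationHelper.classify(face.getIsSmilingProbability(), 0.5f)` would report `UNKNOWN` rather than a misleading `NO` when no smiling probability was computed; the 0.5 threshold is only an assumption.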

Face Orientation

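The orientation of the face is determined using Euler angles, which describe the rotation of the face around the X, Y and Z axes.

- Euler Y tells us whether the face is looking left or right.
- Euler Z tells us whether the face is rotated/tilted.
- Euler X tells us whether the face is looking up or down (currently not supported).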
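In the Vision API these angles are read with `Face.getEulerY()` and `Face.getEulerZ()`, in degrees. The plain-Java sketch below labels a pose from those two values. It only looks at absolute values, so it does not depend on which sign the library uses for left versus right, and the thresholds are illustrative assumptions rather than values from the API. `HeadPose` is a hypothetical helper.

```java
/** Rough, human-readable head pose from the Euler Y (turn) and Euler Z (tilt) angles, in degrees. */
final class HeadPose {
    private HeadPose() {}

    /**
     * Labels the pose using absolute angles only, so the result does not depend on
     * the sign convention for left/right. Thresholds are caller-chosen assumptions.
     */
    static String describe(float eulerY, float eulerZ, float turnThreshold, float tiltThreshold) {
        boolean turned = Math.abs(eulerY) > turnThreshold;
        boolean tilted = Math.abs(eulerZ) > tiltThreshold;
        if (turned && tilted) {
            return "turned and tilted";
        }
        if (turned) {
            return "turned sideways";
        }
        if (tilted) {
            return "tilted";
        }
        return "frontal";
    }
}
```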

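**Note**: If a probability cannot be computed, it is set to -1.

Let's get into the business end of this tutorial. Our application will contain a few sample images along with the ability to capture your own image.

**Note**: This API supports face detection only. The current Mobile Vision API does not provide face recognition.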

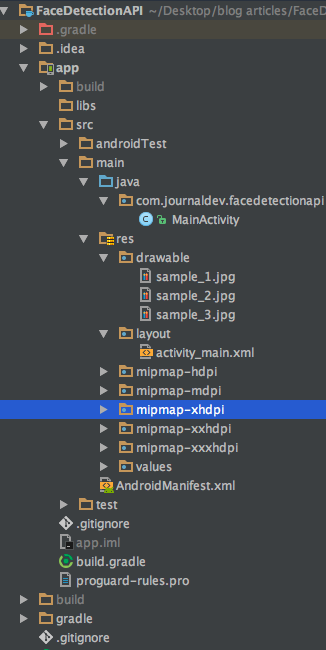

Android Face Detection Example Project Structure

Android Face Detection Code

Add the following dependency to your app's build.gradle file.

```groovy
implementation 'com.google.android.gms:play-services-vision:11.0.4'
```

Add the following meta-data inside the application tag of the AndroidManifest.xml file, as shown below.

```xml
<meta-data
    android:name="com.google.android.gms.vision.DEPENDENCIES"
    android:value="face"/>
```

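This lets the Vision library know that you plan to detect faces in your application. Add the following camera feature and storage permission inside the manifest tag of AndroidManifest.xml.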

```xml
<uses-feature
    android:name="android.hardware.camera"
    android:required="true"/>

<uses-permission
    android:name="android.permission.WRITE_EXTERNAL_STORAGE"/>
```

The code for the activity_main.xml layout file is given below.

```xml
<?xml version="1.0" encoding="utf-8"?>
<ScrollView xmlns:android="http://schemas.android.com/apk/res/android"
    xmlns:app="http://schemas.android.com/apk/res-auto"
    xmlns:tools="http://schemas.android.com/tools"
    android:layout_width="match_parent"
    android:layout_height="match_parent">

    <android.support.constraint.ConstraintLayout
        android:layout_width="match_parent"
        android:layout_height="wrap_content"
        tools:context="com.journaldev.facedetectionapi.MainActivity">

        <!-- Sample images are shown (and annotated) here -->
        <ImageView
            android:id="@+id/imageView"
            android:layout_width="300dp"
            android:layout_height="300dp"
            android:layout_marginTop="8dp"
            android:src="@drawable/sample_1"
            app:layout_constraintLeft_toLeftOf="parent"
            app:layout_constraintRight_toRightOf="parent"
            app:layout_constraintTop_toTopOf="parent" />

        <Button
            android:id="@+id/btnProcessNext"
            android:layout_width="wrap_content"
            android:layout_height="wrap_content"
            android:layout_marginTop="8dp"
            android:text="PROCESS NEXT"
            app:layout_constraintHorizontal_bias="0.501"
            app:layout_constraintLeft_toLeftOf="parent"
            app:layout_constraintRight_toRightOf="parent"
            app:layout_constraintTop_toBottomOf="@+id/imageView" />

        <!-- The captured camera picture is shown (and annotated) here -->
        <ImageView
            android:id="@+id/imgTakePic"
            android:layout_width="250dp"
            android:layout_height="250dp"
            android:layout_marginTop="8dp"
            app:layout_constraintLeft_toLeftOf="parent"
            app:layout_constraintRight_toRightOf="parent"
            app:layout_constraintTop_toBottomOf="@+id/txtSampleDescription"
            app:srcCompat="@android:drawable/ic_menu_camera" />

        <Button
            android:id="@+id/btnTakePicture"
            android:layout_width="wrap_content"
            android:layout_height="wrap_content"
            android:layout_marginTop="8dp"
            android:text="TAKE PICTURE"
            app:layout_constraintLeft_toLeftOf="parent"
            app:layout_constraintRight_toRightOf="parent"
            app:layout_constraintTop_toBottomOf="@+id/imgTakePic" />

        <TextView
            android:id="@+id/txtSampleDescription"
            android:layout_width="match_parent"
            android:layout_height="wrap_content"
            android:layout_marginBottom="8dp"
            android:layout_marginTop="8dp"
            android:gravity="center"
            app:layout_constraintBottom_toTopOf="@+id/txtTakePicture"
            app:layout_constraintLeft_toLeftOf="parent"
            app:layout_constraintRight_toRightOf="parent"
            app:layout_constraintTop_toBottomOf="@+id/btnProcessNext"
            app:layout_constraintVertical_bias="0.0" />

        <TextView
            android:id="@+id/txtTakePicture"
            android:layout_width="wrap_content"
            android:layout_height="wrap_content"
            android:layout_marginTop="8dp"
            android:gravity="center"
            app:layout_constraintLeft_toLeftOf="parent"
            app:layout_constraintRight_toRightOf="parent"
            app:layout_constraintTop_toBottomOf="@+id/btnTakePicture" />

    </android.support.constraint.ConstraintLayout>

</ScrollView>
```

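We've defined two ImageViews, TextViews and Buttons. One set cycles through the sample images and displays the results. The other is used for capturing an image from the [camera](/community/tutorials/android-capture-image-camera-gallery).

The code for the MainActivity.java file is given below.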

```java
package com.journaldev.facedetectionapi;

import android.Manifest;
import android.content.Context;
import android.content.Intent;
import android.content.pm.PackageManager;
import android.graphics.Bitmap;
import android.graphics.BitmapFactory;
import android.graphics.Canvas;
import android.graphics.Color;
import android.graphics.Paint;
import android.net.Uri;
import android.os.Bundle;
import android.os.Environment;
import android.provider.MediaStore;
import android.support.annotation.NonNull;
import android.support.v4.app.ActivityCompat;
import android.support.v7.app.AppCompatActivity;
import android.util.SparseArray;
import android.view.View;
import android.widget.Button;
import android.widget.ImageView;
import android.widget.TextView;
import android.widget.Toast;

import com.google.android.gms.vision.Frame;
import com.google.android.gms.vision.face.Face;
import com.google.android.gms.vision.face.FaceDetector;
import com.google.android.gms.vision.face.Landmark;

import java.io.File;
import java.io.IOException;
import java.io.InputStream;

public class MainActivity extends AppCompatActivity implements View.OnClickListener {

    ImageView imageView, imgTakePicture;
    Button btnProcessNext, btnTakePicture;
    TextView txtSampleDesc, txtTakenPicDesc;
    private FaceDetector detector;
    Bitmap editedBitmap;
    int currentIndex = 0;
    int[] imageArray;
    private Uri imageUri;
    private static final int REQUEST_WRITE_PERMISSION = 200;
    private static final int CAMERA_REQUEST = 101;

    private static final String SAVED_INSTANCE_URI = "uri";
    private static final String SAVED_INSTANCE_BITMAP = "bitmap";

    @Override
    protected void onCreate(Bundle savedInstanceState) {
        super.onCreate(savedInstanceState);
        setContentView(R.layout.activity_main);

        // Restore the camera output URI saved in onSaveInstanceState(), in case the
        // activity was recreated while the camera app was in the foreground.
        if (savedInstanceState != null) {
            String savedUri = savedInstanceState.getString(SAVED_INSTANCE_URI);
            if (savedUri != null) {
                imageUri = Uri.parse(savedUri);
            }
        }

        imageArray = new int[]{R.drawable.sample_1, R.drawable.sample_2, R.drawable.sample_3};
        detector = new FaceDetector.Builder(getApplicationContext())
                .setTrackingEnabled(false)                               // still images: no tracking
                .setLandmarkType(FaceDetector.ALL_LANDMARKS)             // eyes, ears, nose, cheeks, mouth
                .setClassificationType(FaceDetector.ALL_CLASSIFICATIONS) // smiling, eyes open
                .build();

        initViews();
    }

    private void initViews() {
        imageView = (ImageView) findViewById(R.id.imageView);
        imgTakePicture = (ImageView) findViewById(R.id.imgTakePic);
        btnProcessNext = (Button) findViewById(R.id.btnProcessNext);
        btnTakePicture = (Button) findViewById(R.id.btnTakePicture);
        txtSampleDesc = (TextView) findViewById(R.id.txtSampleDescription);
        txtTakenPicDesc = (TextView) findViewById(R.id.txtTakePicture);

        // Scan the first sample image right away.
        processImage(imageArray[currentIndex]);
        currentIndex++;

        btnProcessNext.setOnClickListener(this);
        btnTakePicture.setOnClickListener(this);
        imgTakePicture.setOnClickListener(this);
    }

    @Override
    public void onClick(View v) {
        switch (v.getId()) {
            case R.id.btnProcessNext:
                // Show and scan the next sample image, wrapping around at the end.
                imageView.setImageResource(imageArray[currentIndex]);
                processImage(imageArray[currentIndex]);
                if (currentIndex == imageArray.length - 1)
                    currentIndex = 0;
                else
                    currentIndex++;
                break;

            case R.id.btnTakePicture:
            case R.id.imgTakePic:
                // The photo is written to external storage, so ask for that permission first.
                ActivityCompat.requestPermissions(MainActivity.this, new
                        String[]{Manifest.permission.WRITE_EXTERNAL_STORAGE}, REQUEST_WRITE_PERMISSION);
                break;
        }
    }

    @Override
    public void onRequestPermissionsResult(int requestCode, @NonNull String[] permissions, @NonNull int[] grantResults) {
        super.onRequestPermissionsResult(requestCode, permissions, grantResults);
        switch (requestCode) {
            case REQUEST_WRITE_PERMISSION:
                if (grantResults.length > 0 && grantResults[0] == PackageManager.PERMISSION_GRANTED) {
                    startCamera();
                } else {
                    Toast.makeText(getApplicationContext(), "Permission Denied!", Toast.LENGTH_SHORT).show();
                }
                break;
        }
    }

    @Override
    protected void onActivityResult(int requestCode, int resultCode, Intent data) {
        super.onActivityResult(requestCode, resultCode, data);
        if (requestCode == CAMERA_REQUEST && resultCode == RESULT_OK) {
            launchMediaScanIntent();
            try {
                processCameraPicture();
            } catch (Exception e) {
                Toast.makeText(getApplicationContext(), "Failed to load Image", Toast.LENGTH_SHORT).show();
            }
        }
    }

    // Ask the media scanner to index the new photo so it appears in the gallery.
    private void launchMediaScanIntent() {
        Intent mediaScanIntent = new Intent(Intent.ACTION_MEDIA_SCANNER_SCAN_FILE);
        mediaScanIntent.setData(imageUri);
        this.sendBroadcast(mediaScanIntent);
    }

    private void startCamera() {
        Intent intent = new Intent(MediaStore.ACTION_IMAGE_CAPTURE);
        File photo = new File(Environment.getExternalStorageDirectory(), "photo.jpg");
        // Note: passing a file:// URI to another app throws FileUriExposedException when
        // targeting Android 7.0 (API 24) or higher; use a FileProvider content:// URI there.
        imageUri = Uri.fromFile(photo);
        // The camera app writes the full-size photo to this URI.
        intent.putExtra(MediaStore.EXTRA_OUTPUT, imageUri);
        startActivityForResult(intent, CAMERA_REQUEST);
    }

    @Override
    protected void onSaveInstanceState(Bundle outState) {
        if (imageUri != null) {
            outState.putParcelable(SAVED_INSTANCE_BITMAP, editedBitmap);
            outState.putString(SAVED_INSTANCE_URI, imageUri.toString());
        }
        super.onSaveInstanceState(outState);
    }

    private void processImage(int image) {
        Bitmap bitmap = decodeBitmapImage(image);
        if (detector.isOperational() && bitmap != null) {
            // Copy the image into a mutable bitmap that we can draw on.
            editedBitmap = Bitmap.createBitmap(bitmap.getWidth(), bitmap
                    .getHeight(), bitmap.getConfig());
            float scale = getResources().getDisplayMetrics().density;
            Paint paint = new Paint(Paint.ANTI_ALIAS_FLAG);
            paint.setColor(Color.GREEN);
            paint.setTextSize((int) (16 * scale));
            paint.setShadowLayer(1f, 0f, 1f, Color.WHITE);
            paint.setStyle(Paint.Style.STROKE);
            paint.setStrokeWidth(6f);
            Canvas canvas = new Canvas(editedBitmap);
            canvas.drawBitmap(bitmap, 0, 0, paint);

            // Run face detection on the bitmap.
            Frame frame = new Frame.Builder().setBitmap(editedBitmap).build();
            SparseArray<Face> faces = detector.detect(frame);
            txtSampleDesc.setText(null);

            for (int index = 0; index < faces.size(); ++index) {
                Face face = faces.valueAt(index);
                // Bounding box of the face.
                canvas.drawRect(
                        face.getPosition().x,
                        face.getPosition().y,
                        face.getPosition().x + face.getWidth(),
                        face.getPosition().y + face.getHeight(), paint);

                canvas.drawText("Face " + (index + 1), face.getPosition().x + face.getWidth(), face.getPosition().y + face.getHeight(), paint);

                txtSampleDesc.setText(txtSampleDesc.getText() + "FACE " + (index + 1) + "\n");
                txtSampleDesc.setText(txtSampleDesc.getText() + "Smile probability: " + face.getIsSmilingProbability() + "\n");
                txtSampleDesc.setText(txtSampleDesc.getText() + "Left Eye Is Open Probability: " + face.getIsLeftEyeOpenProbability() + "\n");
                txtSampleDesc.setText(txtSampleDesc.getText() + "Right Eye Is Open Probability: " + face.getIsRightEyeOpenProbability() + "\n\n");

                // Mark each detected landmark with a small circle.
                for (Landmark landmark : face.getLandmarks()) {
                    int cx = (int) (landmark.getPosition().x);
                    int cy = (int) (landmark.getPosition().y);
                    canvas.drawCircle(cx, cy, 8, paint);
                }
            }

            if (faces.size() == 0) {
                txtSampleDesc.setText("Scan Failed: Found nothing to scan");
            } else {
                imageView.setImageBitmap(editedBitmap);
                txtSampleDesc.setText(txtSampleDesc.getText() + "No of Faces Detected: " + faces.size());
            }
        } else {
            txtSampleDesc.setText("Could not set up the detector!");
        }
    }

    // Decode a drawable resource, downsampled to roughly 300x300 pixels.
    private Bitmap decodeBitmapImage(int image) {
        int targetW = 300;
        int targetH = 300;
        BitmapFactory.Options bmOptions = new BitmapFactory.Options();

        // First pass: read only the image dimensions.
        bmOptions.inJustDecodeBounds = true;
        BitmapFactory.decodeResource(getResources(), image, bmOptions);

        int photoW = bmOptions.outWidth;
        int photoH = bmOptions.outHeight;

        // Second pass: decode a downsampled bitmap (a sample size below 1 means no downsampling).
        int scaleFactor = Math.max(1, Math.min(photoW / targetW, photoH / targetH));
        bmOptions.inJustDecodeBounds = false;
        bmOptions.inSampleSize = scaleFactor;

        return BitmapFactory.decodeResource(getResources(), image, bmOptions);
    }

    private void processCameraPicture() throws Exception {
        Bitmap bitmap = decodeBitmapUri(this, imageUri);
        if (detector.isOperational() && bitmap != null) {
            // Copy the image into a mutable bitmap that we can draw on.
            editedBitmap = Bitmap.createBitmap(bitmap.getWidth(), bitmap
                    .getHeight(), bitmap.getConfig());
            float scale = getResources().getDisplayMetrics().density;
            Paint paint = new Paint(Paint.ANTI_ALIAS_FLAG);
            paint.setColor(Color.GREEN);
            paint.setTextSize((int) (16 * scale));
            paint.setShadowLayer(1f, 0f, 1f, Color.WHITE);
            paint.setStyle(Paint.Style.STROKE);
            paint.setStrokeWidth(6f);
            Canvas canvas = new Canvas(editedBitmap);
            canvas.drawBitmap(bitmap, 0, 0, paint);

            // Run face detection on the bitmap.
            Frame frame = new Frame.Builder().setBitmap(editedBitmap).build();
            SparseArray<Face> faces = detector.detect(frame);
            txtTakenPicDesc.setText(null);

            for (int index = 0; index < faces.size(); ++index) {
                Face face = faces.valueAt(index);
                // Bounding box of the face.
                canvas.drawRect(
                        face.getPosition().x,
                        face.getPosition().y,
                        face.getPosition().x + face.getWidth(),
                        face.getPosition().y + face.getHeight(), paint);

                canvas.drawText("Face " + (index + 1), face.getPosition().x + face.getWidth(), face.getPosition().y + face.getHeight(), paint);

                // Append (rather than overwrite) so that every detected face is listed.
                txtTakenPicDesc.setText(txtTakenPicDesc.getText() + "FACE " + (index + 1) + "\n");
                txtTakenPicDesc.setText(txtTakenPicDesc.getText() + "Smile probability: " + face.getIsSmilingProbability() + "\n");
                txtTakenPicDesc.setText(txtTakenPicDesc.getText() + "Left Eye Is Open Probability: " + face.getIsLeftEyeOpenProbability() + "\n");
                txtTakenPicDesc.setText(txtTakenPicDesc.getText() + "Right Eye Is Open Probability: " + face.getIsRightEyeOpenProbability() + "\n\n");

                // Mark each detected landmark with a small circle.
                for (Landmark landmark : face.getLandmarks()) {
                    int cx = (int) (landmark.getPosition().x);
                    int cy = (int) (landmark.getPosition().y);
                    canvas.drawCircle(cx, cy, 8, paint);
                }
            }

            if (faces.size() == 0) {
                txtTakenPicDesc.setText("Scan Failed: Found nothing to scan");
            } else {
                imgTakePicture.setImageBitmap(editedBitmap);
                txtTakenPicDesc.setText(txtTakenPicDesc.getText() + "No of Faces Detected: " + faces.size());
            }
        } else {
            txtTakenPicDesc.setText("Could not set up the detector!");
        }
    }

    // Decode the captured photo from its URI, downsampled to roughly 300x300 pixels.
    private Bitmap decodeBitmapUri(Context ctx, Uri uri) throws IOException {
        int targetW = 300;
        int targetH = 300;
        BitmapFactory.Options bmOptions = new BitmapFactory.Options();

        // First pass: read only the image dimensions, then close the stream.
        bmOptions.inJustDecodeBounds = true;
        InputStream boundsStream = ctx.getContentResolver().openInputStream(uri);
        try {
            BitmapFactory.decodeStream(boundsStream, null, bmOptions);
        } finally {
            if (boundsStream != null) boundsStream.close();
        }
        int photoW = bmOptions.outWidth;
        int photoH = bmOptions.outHeight;

        // Second pass: decode a downsampled bitmap from a fresh stream.
        int scaleFactor = Math.max(1, Math.min(photoW / targetW, photoH / targetH));
        bmOptions.inJustDecodeBounds = false;
        bmOptions.inSampleSize = scaleFactor;

        InputStream imageStream = ctx.getContentResolver().openInputStream(uri);
        try {
            return BitmapFactory.decodeStream(imageStream, null, bmOptions);
        } finally {
            if (imageStream != null) imageStream.close();
        }
    }

    @Override
    protected void onDestroy() {
        super.onDestroy();
        // Free the native resources held by the detector.
        detector.release();
    }
}
```
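Both `decodeBitmapImage()` and `decodeBitmapUri()` downsample the image to roughly 300×300 by setting `inSampleSize` to the integer ratio of the image size to the target size. `BitmapFactory` treats values below 1 as 1, and its documentation states that the decoder rounds other values down to the nearest power of 2. The plain-Java sketch below mirrors that arithmetic so you can see which sample size is effectively used; `SampleSize` is a hypothetical helper, and the power-of-two rounding models the documented decoder behavior rather than calling Android.

```java
final class SampleSize {
    private SampleSize() {}

    /** Same ratio as the tutorial: the smaller of the two integer downscale factors. */
    static int requested(int photoW, int photoH, int targetW, int targetH) {
        return Math.min(photoW / targetW, photoH / targetH);
    }

    /**
     * Models the documented BitmapFactory behavior: values below 1 act as 1,
     * and any other value is rounded down to the nearest power of 2.
     */
    static int effective(int requested) {
        if (requested <= 1) {
            return 1;
        }
        return Integer.highestOneBit(requested);
    }
}
```

For a 4000×3000 photo the requested factor is min(13, 10) = 10, which the decoder rounds down to 8, so the decoded bitmap is about 500×375 pixels rather than 400×300.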

A few inferences drawn from the above code are:

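- `imageArray` holds the sample images that are scanned for faces when the "PROCESS NEXT" button is clicked.
- The detector is instantiated using the following code snippet:

```java
FaceDetector detector = new FaceDetector.Builder(getApplicationContext())
        .setTrackingEnabled(false)
        .setLandmarkType(FaceDetector.ALL_LANDMARKS)
        .setMode(FaceDetector.FAST_MODE)
        .build();
```

Landmarks add to the computation time, so they need to be enabled explicitly. The face detector can be set to `FAST_MODE` or `ACCURATE_MODE` depending on your requirements. We've set tracking to `false` in the code above because we're dealing with still images; set it to `true` if you want to detect faces in a video.

- The `processImage()` and `processCameraPicture()` methods contain the code where we actually detect the faces and draw a rectangle over them.
- `detector.isOperational()` checks whether the Google Play services library currently on your phone supports the Vision API (if it doesn't yet, Google Play services downloads the required native libraries to enable support).
- The code snippet that actually performs face detection is:

```java
Frame frame = new Frame.Builder().setBitmap(editedBitmap).build();
SparseArray<Face> faces = detector.detect(frame);
```

- Once detection is done, we loop through the `faces` array to find the position and attributes of each face.
- The attributes of each face are appended to the TextView beneath the button.
- The same applies when an image is captured with the camera, except that we need to request the storage permission at runtime and keep the URI of the file that the camera app saves the picture to.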
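The detection loops build each face's description by calling `setText(getText() + ...)` several times per face. One alternative, shown here as a hypothetical plain-Java sketch, is to assemble the whole text in a `StringBuilder` and call `setText()` once. `FaceSummary` stands in for the three probabilities read from each `Face`, and `FaceReport` is not part of any library; the labels follow the ones used in `processImage()`.

```java
import java.util.List;

/** The values read from one detected face (a stand-in for the Vision API's Face). */
final class FaceSummary {
    final float smiling;
    final float leftEyeOpen;
    final float rightEyeOpen;

    FaceSummary(float smiling, float leftEyeOpen, float rightEyeOpen) {
        this.smiling = smiling;
        this.leftEyeOpen = leftEyeOpen;
        this.rightEyeOpen = rightEyeOpen;
    }
}

final class FaceReport {
    private FaceReport() {}

    /** Builds the complete description in one pass so the TextView is updated only once. */
    static String build(List<FaceSummary> faces) {
        if (faces.isEmpty()) {
            return "Scan Failed: Found nothing to scan";
        }
        StringBuilder sb = new StringBuilder();
        for (int i = 0; i < faces.size(); i++) {
            FaceSummary f = faces.get(i);
            sb.append("FACE ").append(i + 1).append('\n')
              .append("Smile probability: ").append(f.smiling).append('\n')
              .append("Left Eye Is Open Probability: ").append(f.leftEyeOpen).append('\n')
              .append("Right Eye Is Open Probability: ").append(f.rightEyeOpen).append("\n\n");
        }
        sb.append("No of Faces Detected: ").append(faces.size());
        return sb.toString();
    }
}
```

In the activity you would fill a `List<FaceSummary>` inside the detection loop and call `txtSampleDesc.setText(FaceReport.build(summaries))` once after it.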

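The output of the above application in action is given below. Try capturing a photo of a dog and you'll see that the Vision API doesn't detect its face (the API detects human faces only). That brings an end to this tutorial. You can download the final **Android Face Detection API Project** from the link below.

[Download Android Face Detection Project](https://journaldev.nyc3.digitaloceanspaces.com/android/AndroidFaceDetection.zip)

References: [Official Documentation](https://developers.google.com/vision/)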